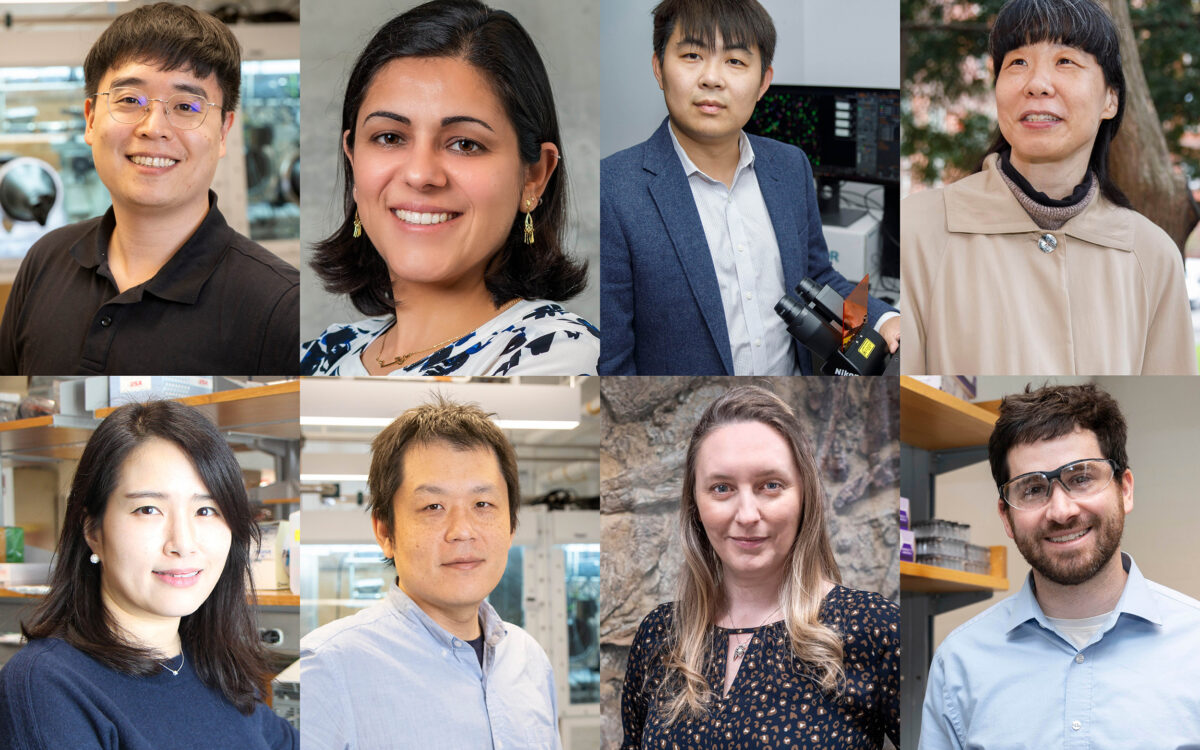

“Face-to-face conversation is where intimacy and empathy develop,” says Sherry Turkle.

Niles Singer/Harvard Staff Photographer

Lifting a few with my chatbot

Sociologist Sherry Turkle warns against growing trend of turning to AI for companionship, counsel

Artificial intelligence holds the promise of optimizing many aspects of life, from education and medical research to business and the arts. But some applications may have a deeply worrisome effect on human relationships.

During a talk March 20 at Harvard Law School, MIT sociologist Sherry Turkle, whose books include “Reclaiming Conversation” and “The Empathy Diaries,” outlined her concerns that people are starting to turn to generative AI chatbots to ease loneliness, a rising public health dilemma across the nation. The technology is not solving this problem but adding to it by warping our ability to empathize with others and to appreciate the value of real interpersonal connection, she said.

Turkle, also a trained psychotherapist, said it’s “the greatest assault on empathy” she’s ever seen.

Many already avoid face-to-face interactions in favor of texting or social media, out of fear of rejection or discomfort about where things will go. “In my research, the most common thing that I hear is ‘I’d rather text than talk.’ People want, whenever possible, to keep their social interactions on the screen,” she said. “Why? It’s because they feel less vulnerable.”

But the convenience and ease of text and chat belie the harms caused when digital technology becomes the primary medium through which people connect with family and friends, meet prospective dates, or find someone with whom to share worries and feelings.

“Face-to-face conversation is where intimacy and empathy develop,” she said. “At work, conversation fosters productivity, engagement, and clarity and collaboration.”

Now, AI chatbots serve as therapists and companions, providing a second-rate sense of connection, or what Turkle calls artificial intimacy.

They offer a simulated, hollowed-out version of empathy, she said. They don’t understand, or care about, what the user is going through. They’re designed to keep users happily engaged, and providing simulated empathy is just a means to that end.

Based on her research, Turkle said that, surprisingly, many people seem to find pretend empathy fairly satisfying even though they realize that it’s not authentic.

“They say, ‘People disappoint; they judge you; they abandon you; the drama of human connection is exhausting,’” she said, whereas, “Our relationship with a chatbot is a sure thing. It’s always there day and night.”

Tech executives and technologists who work with generative AI seem to share a common belief that because the technology’s responses in an interaction are rooted in big data, it will always outperform human beings, regardless of their expertise or training, in providing satisfaction, Turkle said.

But averaging vast quantities of data to produce a probable or popular response doesn’t work for human psychology. Lasting change comes not from a therapist delivering curated bits of information “but from the nurturing of a relationship,” she said. She noted that major healthcare corporations are now considering using chatbot therapists as the first option for patients, in part because demand for human therapists far exceeds supply.

Society needs to stop and assess whether AI is molding us into the people we want to be, she said.

“The technology challenges us to assert ourselves and our human values, which means that we have to figure out what those values are — which is not very easy,” said Turkle. “I think that conversation needs to really start now. This is really a moment of inflection.”